The Ultimate Guide to Technical Search Engine Optimization

Are you looking for the ultimate guide to technical search engine optimization? Look no further! This blog post will provide all the necessary information on optimizing your website from a technical perspective.

We’ll cover what technical SEO is, why it’s essential, and the fundamentals to consider when optimizing a site. We’ll also discuss site structure and navigation, crawling, rendering and indexing, thin and duplicate content, and more, so you can get top-notch SEO performance from your website.

Let’s get started!

What is Technical SEO?

Technical SEO is the practice of optimizing a website to improve its visibility in search engine results pages (SERPs). It involves analyzing and improving the technical elements of a site, such as HTML code, meta tags, site speed, and site architecture, so that search engines can crawl and index every part of it effectively.

Why is Technical SEO Important?

Technical SEO is an essential part of any website’s success. It helps search engines find, crawl, and index your site so potential customers can see it.

Without technical SEO, a website is unlikely to reach its full potential for visibility and ranking in the SERPs.

There are several reasons technical SEO is essential. First, if search engine bots can’t crawl and index your site, it won’t show up in the SERPs at all!

This means no one will ever see your content or what you offer. Second, a well-structured website with straightforward navigation helps users find what they need quickly, which leads to a better overall user experience.

Finally, fast-loading pages and the removal of thin or duplicate content improve the user experience and can help your rankings, since Google’s ranking systems consider page experience signals such as Core Web Vitals, which you can measure with Google PageSpeed Insights.

Technical SEO Process

Technical SEO involves optimizing the technical aspects of a website so that search engines can crawl, index, and rank it appropriately.

Technical SEO fundamentals include creating an XML sitemap, setting up redirects, using canonical tags, optimizing page speed and mobile usability, ensuring crawlability and indexability, and more.

#1. Audit Your Preferred Domain

It’s essential to audit your preferred domain, as search engines will prioritize this version of your website.

You can select a preferred domain by telling search engines whether you prefer your site’s www or non-www version to be displayed in the search results.

For example, if you want www.yourwebsite.com to be prioritized over yourwebsite.com, set up permanent (301) redirects so that all variants (www, non-www, HTTP, and index.html) point to that URL.
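To make the redirect rule concrete, here is a minimal Python sketch of the normalization a server or CDN rule would perform, assuming https://www.yourwebsite.com is the preferred version. The function names and host list are illustrative, not part of any particular server’s configuration.

```python
from urllib.parse import urlsplit, urlunsplit

# Assumption: https://www.yourwebsite.com is the preferred version of the site.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.yourwebsite.com"
OWN_HOSTS = {"yourwebsite.com", "www.yourwebsite.com"}

def preferred_url(url):
    """Return the URL that a request for `url` should be 301-redirected to."""
    parts = urlsplit(url)
    if (parts.hostname or "") not in OWN_HOSTS:
        return url  # not our site; leave untouched
    path = parts.path or "/"
    # Treat /index.html (at any directory level) as the directory URL itself.
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]
    return urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, path, parts.query, ""))

def needs_redirect(url):
    """True when the requested URL is a non-preferred variant."""
    return preferred_url(url) != url
```

Any request for which `needs_redirect` is true would receive a 301 pointing at `preferred_url(url)`.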

Use Canonical Tags

If Google chooses a version to show searchers on its own, check that it’s the one you want them to see (the URL Inspection tool in Google Search Console shows which canonical Google selected).

Otherwise, use canonical tags to specify which page takes precedence when several versions of the same content exist. This tells search engines which page is “the original,” so its SEO value is consolidated on one URL instead of being dispersed across several URLs with similar content.
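A canonical tag is a `<link rel="canonical" href="...">` element in the page’s `<head>`. The sketch below, using only Python’s standard `html.parser`, shows one way an audit script might read it back. Treating multiple canonical tags as “no usable canonical” is a simplifying assumption, reflecting that conflicting tags send mixed signals.

```python
from html.parser import HTMLParser
from typing import Optional

class CanonicalFinder(HTMLParser):
    """Collects the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])

def canonical_of(html) -> Optional[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    # More than one canonical tag is a conflicting signal; treat it as none.
    return finder.canonicals[0] if len(finder.canonicals) == 1 else None
```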

Broken Links Or Redirect Chains

Additionally, watch for broken links and redirect chains: they harm the user experience, can prevent crawlers from indexing pages properly, and may lead to lower rankings in the SERPs.

Internal Links

Make sure every internal link points directly at its final destination rather than at a URL that redirects, because each extra hop is a detour for users and crawlers alike, and no one likes taking detours when trying to get somewhere quickly!
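If you have a map of your site’s redirects (for example, exported from a crawler), rewriting internal links to skip the detours is a simple lookup. This is a sketch; the redirect map format and the 10-hop limit are assumptions.

```python
def final_destination(url, redirects, max_hops=10):
    """Follow a redirect map (old URL -> new URL) until reaching a URL that doesn't redirect."""
    seen = {url}
    hops = 0
    while url in redirects:
        url = redirects[url]
        hops += 1
        if url in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {url}")
        seen.add(url)
    return url

def fix_internal_links(links, redirects):
    """Rewrite each internal link so it points straight at its final destination."""
    return [final_destination(link, redirects) for link in links]
```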

Crawl Errors

Pay attention to crawl errors such as 404s (Page Not Found), since they are dead ends for users and bots alike, leading them down a rabbit hole with no way back!

Fixing these issues gives visitors a smooth journey around your website and boosts SEO performance by ensuring everything works correctly under the hood, so no page gets missed during indexing.

#2. Implement SSL

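SSL, or Secure Sockets Layer (today replaced by its successor, TLS, though the name SSL is still widely used), encrypts the connection between the web server and the browser to keep user information secure. A secured page has a URL beginning with “https:” instead of “http:”, and browsers display a security indicator, traditionally a lock symbol, in the address bar.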

In 2014, Google officially declared that SSL would be considered a ranking factor.

To get started, install an SSL/TLS certificate on your server, then migrate every non-SSL page from http to https and 301-redirect each old http URL to its https equivalent.

Once SSL is in place across your entire website, monitor it regularly with tools like Google Search Console or Screaming Frog SEO Spider as part of an ongoing technical SEO audit, so everything keeps running smoothly and securely.
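One thing worth checking after a migration is mixed content: an https page that still loads images, scripts, or stylesheets over plain http. Below is a rough Python sketch that flags such resources. It only looks at `src` attributes and `<link href>`, so treat it as a starting point rather than a complete check.

```python
from html.parser import HTMLParser

class MixedContentFinder(HTMLParser):
    """Finds subresources that an HTTPS page would load over plain HTTP."""

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if not value:
                continue
            # src (img, script, iframe...) and <link href> load resources;
            # ordinary <a href> links are navigation, not mixed content.
            loads_resource = name == "src" or (tag == "link" and name == "href")
            if loads_resource and value.lower().startswith("http://"):
                self.insecure.append((tag, value))

def find_mixed_content(html):
    finder = MixedContentFinder()
    finder.feed(html)
    return finder.insecure
```

Plain `<a href="http://...">` links to your own pages are not mixed content, but updating them to https still saves a redirect hop.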

#3. Improving Page Speed

How long will visitors wait for your website to load? Probably not long. According to some data, as page load time goes from one second to five seconds, the probability of a visitor bouncing increases by 90%. You don’t have a second to waste, so making your website load faster should be a top priority.

To ensure that your web pages load quickly, there are several steps you can take.

Optimize Images

Optimizing images is one of the most effective ways to improve page speed. Images tend to be large files, so reducing their size without sacrificing quality will help them load faster on browsers.

Compressing images using tools like TinyPNG or JPEGmini will reduce file sizes significantly while preserving image quality.

Additionally, make sure each image is sized for how it will be displayed; don’t upload a full-size image if it will only be shown as a thumbnail on the page!
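Working out the right display size is simple arithmetic. This hypothetical helper computes the largest dimensions that fit the space an image will occupy while preserving its aspect ratio; the actual resizing would still be done by an image tool or library.

```python
def fit_within(width, height, max_width, max_height):
    """Largest size that fits the display box while keeping the aspect ratio.
    Never upscales: an image already smaller than the box is returned unchanged."""
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))
```

For example, a 4000x3000 photo shown in a 300x300 thumbnail slot only needs to be 300x225, well under 1% of the original pixel count.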

Minifying HTML, CSS, And JavaScript Code

Another way to boost page speed is by minifying HTML, CSS, and JavaScript code.

Minification removes characters that browsers don’t need to render the content correctly, such as whitespace and comments, but that add extra bytes when files are downloaded over the internet.

For JavaScript, tools like Closure Compiler or UglifyJS can do this automatically, without requiring manual changes from developers.
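To show what minification actually strips out, here is a deliberately naive CSS minifier in Python. It is for illustration only: it does not understand strings, `url()` values, or the other edge cases that production minifiers handle.

```python
import re

def minify_css(css):
    """Naive CSS minifier: strips comments and whitespace that browsers ignore.
    Not safe for CSS with strings or url() values containing these characters."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.S)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                   # collapse runs of whitespace
    css = re.sub(r"\s*([{};,>])\s*", r"\1", css)     # drop spaces around punctuation
    css = re.sub(r":\s+", ":", css)                  # "color: red" -> "color:red"
    css = css.replace(";}", "}")                     # last semicolon in a block is optional
    return css.strip()
```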

Caching Resources

Caching resources is another great way to improve page speed: cached copies of files don’t need to be downloaded again each time someone revisits a page, which also saves bandwidth.
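Caching is controlled through HTTP response headers. The sketch below assumes a simple policy (long-lived caching for fingerprinted static assets, revalidation for HTML) and shows how an ETag lets a server answer a repeat request with a bodyless 304 Not Modified. The file extensions, `max-age` value, and function names are illustrative choices.

```python
import hashlib

STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".webp", ".woff2")

def cache_headers(path, body):
    """Choose caching headers for a response (policy values are assumptions)."""
    etag = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    if path.endswith(STATIC_EXTENSIONS):
        # Assumes static filenames are fingerprinted, so a year-long cache is safe.
        cache_control = "public, max-age=31536000, immutable"
    else:
        # HTML: browsers may keep a copy but must revalidate before reusing it.
        cache_control = "no-cache"
    return {"ETag": etag, "Cache-Control": cache_control}

def respond(path, body, if_none_match=None):
    """Return (status, headers, body); 304 means the browser's cached copy is still valid."""
    headers = cache_headers(path, body)
    if if_none_match == headers["ETag"]:
        return 304, headers, b""
    return 200, headers, body
```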

CDNs

Finally, consider using a Content Delivery Network (CDN), which serves content from servers distributed around the world instead of from a single location. Because data travels a shorter distance between each user and the nearest CDN node, users receive content faster.
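The proximity effect can be illustrated by picking the geographically closest edge server with the haversine formula. The edge locations below are hypothetical, and real CDNs typically route users via DNS or anycast based on network conditions rather than straight-line distance, so this is only a conceptual sketch.

```python
from math import asin, cos, radians, sin, sqrt

# Hypothetical edge locations: name -> (latitude, longitude).
EDGES = {
    "frankfurt": (50.11, 8.68),
    "virginia": (38.95, -77.45),
    "singapore": (1.35, 103.82),
}

def haversine_km(a, b):
    """Great-circle distance in kilometres between two (lat, lon) points."""
    lat1, lon1, lat2, lon2 = map(radians, (*a, *b))
    h = sin((lat2 - lat1) / 2) ** 2 + cos(lat1) * cos(lat2) * sin((lon2 - lon1) / 2) ** 2
    return 2 * 6371 * asin(sqrt(h))

def nearest_edge(user):
    """Name of the edge location closest to the user's (lat, lon)."""
    return min(EDGES, key=lambda name: haversine_km(user, EDGES[name]))
```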

#4. Making Your Website Crawlable

Making your website crawlable is an essential part of technical SEO. Here are some tips for making sure your website is as crawlable as possible:

Create An XML Sitemap

An XML sitemap provides a roadmap for search engine crawlers to follow when they visit your site. Once complete, submit your sitemap to Google Search Console and Bing Webmaster Tools. Remember to keep your sitemap up-to-date as you add and remove web pages.

Your sitemap should list the URLs of all essential pages on the site, including blog posts, product pages, and category pages, so crawlers can quickly locate them and add them to their index.
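Sitemaps follow the sitemaps.org XML format: a `<urlset>` containing one `<url>` entry per page, each with a `<loc>` and, optionally, a `<lastmod>` date. A minimal generator using Python’s standard library might look like this; the page list is a placeholder for whatever your CMS or crawler provides.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """pages: iterable of (absolute_url, last_modified_date in YYYY-MM-DD form)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and < for us
        ET.SubElement(url, "lastmod").text = lastmod
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")
```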

Maximize Your Crawl Budget

Crawl budget refers to the number of URLs Googlebot can and wants to crawl on your site within a given period.

To get the most out of it, speed up page loading (for example, by compressing images or using caching plugins), reduce duplicate content, eliminate broken links, and use shorter URLs with fewer parameters so bots don’t waste requests on near-identical URL variations.
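Many wasted crawls come from URL parameters that don’t change the page, such as tracking tags. The sketch below normalizes URLs by dropping an assumed list of such parameters and sorting the rest, so variants collapse to one address. Which parameters are safe to ignore depends entirely on your site; `IGNORED_PARAMS` is hypothetical.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters never change the content of a page on this site.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid"}

def clean_url(url):
    """Drop content-neutral parameters and sort the rest into a stable order."""
    parts = urlsplit(url)
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in IGNORED_PARAMS]
    kept.sort()
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
```

Comparing `len(urls)` with `len({clean_url(u) for u in urls})` over a crawl export gives a rough sense of how many requests go to duplicate variants.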

Optimize Your Site Architecture

Your website’s architecture should make sense to users and search engines alike. Think “flat” rather than “deeply nested”: try not to have more than three levels between the homepage and any other page.

This helps ensure that bots don’t get lost while navigating the site’s sections or miss important pages because too many clicks are needed to reach them from the homepage.
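Click depth can be measured with a breadth-first search over your internal links, starting at the homepage. In this sketch, `links` is a hypothetical mapping from each page to the pages it links to, such as one exported from a site crawl.

```python
from collections import deque

def click_depths(links, home="/"):
    """Breadth-first search from the homepage; depth = minimum number of clicks."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def too_deep(links, max_depth=3, home="/"):
    """Pages that need more than `max_depth` clicks from the homepage."""
    return sorted(p for p, d in click_depths(links, home).items() if d > max_depth)
```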

Set A URL Structure

Make sure all URLs are short yet descriptive, so visitors know what they’re clicking on before they land on the page. Clear, readable URLs can also improve click-through rates from the SERPs, since people tend to trust links they can understand, and they are easier for bots to interpret.

Additionally, consider organizing URLs by category or tag where appropriate; this adds context about what kind of content a page contains, which can help with related searches later on.
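Short, descriptive URLs are often generated from page titles. Here is one possible slug function; the six-word cap is an arbitrary assumption you would tune.

```python
import re
import unicodedata

def slugify(title, max_words=6):
    """Turn a page title into a short, lowercase, hyphen-separated URL slug."""
    # Decompose accented characters and drop the accents (é -> e).
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    text = text.replace("'", "")  # "Men's" -> "Mens" rather than "men-s"
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words[:max_words])
```

A category folder can then be prefixed, for example `"/blog/" + slugify(title) + "/"`.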

Add Breadcrumb Menus

Breadcrumbs are small navigational elements, usually placed near the top of a web page, that show the user’s current location relative to the rest of the website hierarchy (for example, Home > Shoes > Running).

They show people where they currently are and let them quickly backtrack to higher-level pages, helping both humans and search engines find their way around complex sites!
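Breadcrumbs can also be described to search engines with schema.org `BreadcrumbList` structured data. Below is a small Python sketch that builds the JSON-LD from a trail of (name, URL) pairs; the trail used in the test is hypothetical.

```python
import json

def breadcrumb_jsonld(trail):
    """trail: list of (name, absolute_url) from the homepage down to the current page."""
    return json.dumps({
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }, indent=2)
```

The output would go inside a `<script type="application/ld+json">` tag on the page.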

Utilize Robots.txt

A robots.txt file lets webmasters control which parts of their website search engine bots may crawl, so you can decide where crawlers spend their time. Note that it controls crawling, not indexing: a blocked URL can still be indexed if other pages link to it, so to keep a crawlable page out of search results, use a noindex tag instead.

Robots.txt can also stop crawlers from wasting time on multiple versions of the same content in different directories of your domain, although canonical tags are usually the better way to consolidate duplicates.
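Python’s standard library includes a robots.txt parser, which is handy for sanity-checking rules before deploying them. The rules below are a hypothetical example for yourwebsite.com. One caveat: Python’s parser applies rules in file order (first match wins), whereas Google uses the most specific matching rule, so results can differ when Allow and Disallow lines overlap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for yourwebsite.com.
ROBOTS_TXT = """
User-agent: *
Disallow: /cart/
Disallow: /search
Allow: /

Sitemap: https://www.yourwebsite.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def is_allowed(path, agent="Googlebot"):
    """Would `agent` be allowed to crawl this path under the rules above?"""
    return parser.can_fetch(agent, "https://www.yourwebsite.com" + path)
```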

Use Pagination

Pagination breaks large amounts of information into smaller, bite-sized pages, which improves overall usability.

For example, instead of displaying one long list of products on a single screen, you might divide the items into several separate pages by category, price range, and so on, letting customers quickly narrow down their options based on their preferences.

Not only does this make browsing easier, but it also avoids overwhelming visitors with too many choices at once!
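Paginated listings are usually served with one crawlable URL per page. The sketch below shows the slicing arithmetic and a `?page=N` URL scheme; the parameter name, page size, and function names are assumptions, and any stable URL pattern would work.

```python
from math import ceil

def paginate(items, page, per_page=24):
    """Return the items for a 1-based page number plus the total page count."""
    total_pages = max(1, ceil(len(items) / per_page))
    if not 1 <= page <= total_pages:
        raise ValueError(f"page must be between 1 and {total_pages}")
    start = (page - 1) * per_page
    return items[start:start + per_page], total_pages

def page_url(base, page):
    """Give each page its own URL; page 1 is the base URL itself."""
    return base if page == 1 else f"{base}?page={page}"
```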

Check SEO Log Files

Finally, check your server logs regularly to monitor crawling activity. Look for errors and warnings on particular requests made by search bots to see whether anything is hindering their ability to explore your domain properly.

If you spot something amiss, address it promptly so it doesn’t disrupt future crawls. Ask a developer for access to your log files, or use a log file analyzer like Screaming Frog.
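If you want to inspect logs yourself, a short script can summarize what Googlebot requested. This sketch assumes the Combined Log Format (the default for Apache and Nginx access logs) and matches Googlebot by its user-agent string, which can be spoofed; verify important findings with a reverse DNS lookup.

```python
import re
from collections import Counter

# Combined Log Format: ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_summary(lines):
    """Count status codes and error URLs for requests claiming to be Googlebot."""
    statuses, errors = Counter(), Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if not m or "Googlebot" not in m["agent"]:
            continue  # unparseable line or not a Googlebot request
        statuses[m["status"]] += 1
        if m["status"].startswith(("4", "5")):
            errors[m["path"]] += 1
    return statuses, errors
```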

#5. Indexing Your Website

Indexing is the process by which search engines discover pages and add them to their index so that they can be included in search results.

To ensure that your site is indexed correctly, there are a few steps you should take:

Unblock Search Bots from Accessing Pages

Search bots must be able to access your pages; if they can’t, they won’t be able to index them. Check your robots.txt file for blocked URLs or directories, and make sure no “noindex” tags have been added to pages you want indexed.
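A noindex directive can live in a robots meta tag or in an `X-Robots-Tag` HTTP header, so an audit should check both. This Python sketch does that for one page; the `headers` dictionary stands in for whatever your HTTP client returns (and, as an assumption of the sketch, uses the exact key `X-Robots-Tag`). Crawlers can only see a noindex directive on pages that robots.txt does not block.

```python
from html.parser import HTMLParser

BLOCKING = {"noindex", "none"}  # "none" means noindex, nofollow

class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() in ("robots", "googlebot"):
            for d in (attr.get("content") or "").split(","):
                self.directives.add(d.strip().lower())

def is_noindexed(html, headers=None):
    """True if the page carries noindex in a robots meta tag or an X-Robots-Tag header."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    header = (headers or {}).get("X-Robots-Tag", "")
    header_directives = {d.strip().lower() for d in header.split(",")}
    return bool((finder.directives | header_directives) & BLOCKING)
```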

Remove Duplicate Content

Search engines prefer to avoid duplicate content because it dilutes their ability to provide accurate and relevant results for users’ queries.

So consolidate or remove duplicate content on your site (for example, with 301 redirects or canonical tags); otherwise, you could end up with multiple versions of the same page being indexed instead of just one!
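Exact duplicates can be found by fingerprinting each page’s text after normalizing case and whitespace. This is a minimal sketch assuming you already have the extracted text of each page. Near-duplicates (pages that differ by a sentence or two) need fuzzier techniques such as shingling, which this does not attempt.

```python
import hashlib
import re
from collections import defaultdict

def content_fingerprint(text):
    """SHA-256 of the page text with case and whitespace normalized."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def duplicate_groups(pages):
    """pages: dict of url -> extracted text. Returns groups of URLs with identical text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]
```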

Audit Your Redirects

Check that old and alternate URLs redirect in a single step to the version you want indexed. This helps ensure that only one version of each page gets indexed and avoids confusion caused by conflicting signals from different versions of a page.

Check The Mobile-Responsiveness Of Your Site

If your website isn’t mobile-friendly by now, you’re already behind the curve. Google announced mobile-first indexing in 2016, meaning it primarily uses the mobile version of a page for indexing and ranking, and this is now the default. To find where your website needs to improve, test your pages with a tool such as Lighthouse (Google retired its standalone Mobile-Friendly Test in 2023).
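One basic prerequisite for a mobile-friendly page is a viewport meta tag that sets `width=device-width`. The sketch below checks only that single signal; a full audit tool such as Lighthouse looks at much more.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Records the content of <meta name="viewport">, if present."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "viewport":
            self.viewport = attr.get("content") or ""

def has_responsive_viewport(html):
    finder = ViewportFinder()
    finder.feed(html)
    if finder.viewport is None:
        return False
    settings = {}
    for part in finder.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    return settings.get("width") == "device-width"
```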

Fix HTTP Errors

Any error that occurs when someone tries to access a page (e.g., 404 Not Found) creates a poor experience for visitors and wastes crawler requests, and a page that returns an error can’t be listed in Google’s SERPs!

So fix broken links as soon as possible. Tools such as Screaming Frog SEO Spider or Xenu Link Sleuth crawl every link on your site and report the errors that need fixing before you request re-indexing.
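Once a crawler has recorded the status code of every URL, turning that into a fix list is a simple join between the link graph and the status codes. This sketch assumes both are available as Python dictionaries; the names are illustrative.

```python
def broken_links(link_graph, status_codes):
    """link_graph: page -> list of linked URLs; status_codes: URL -> HTTP status from a crawl.
    Returns sorted (source_page, target, status) tuples for links whose target returned 4xx/5xx."""
    report = []
    for source, targets in link_graph.items():
        for target in targets:
            status = status_codes.get(target)
            if status is not None and status >= 400:
                report.append((source, target, status))
    return sorted(report)
```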

PageSpeed

Page speed also plays a vital role in getting sites properly listed: slow-loading pages mean fewer visitors stick around long enough to read what’s there.

So optimizing images and videos and reducing server response times wherever feasible helps here too!

#6. Rendering Your Website

Rendering is the step in which a browser or search engine bot executes a page’s HTML, CSS, and JavaScript to build the content people actually see. Optimizing it is a critical part of technical SEO, because it determines whether users and bots can access all of your content quickly and efficiently.

Server Performance

If you’re experiencing server timeouts or errors, it’s essential to troubleshoot them as soon as possible.

These issues will prevent users and bots from accessing your web pages, resulting in poor rankings on SERPs (Search Engine Results Pages).

To identify potential problems, use a web crawler like Screaming Frog, Botify, or DeepCrawl to perform an error audit of your site.

HTTP Status

HTTP errors are another issue that can block access to your pages for both users and bots alike.

You should also use a web crawler to check for these types of errors – if any are found, you’ll need to resolve them before continuing with other optimization tasks.

Load Time & Page Size

To ensure optimal performance, try reducing page size by compressing images and minifying HTML, CSS, and JavaScript files where necessary.

JavaScript Rendering

Search engines may struggle to render JavaScript-heavy websites correctly, which means they may not be able to index all of the content, so optimize accordingly!

For example, serve pre-rendered HTML snapshots (through server-side rendering or static pre-rendering) so that Googlebot receives the important content without having to execute your JavaScript.
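A quick way to see how much a page depends on rendering is to check whether its key content is already present in the raw HTML the server sends, before any JavaScript runs. This rough sketch, with a hypothetical page and phrase list, ignores text inside `<script>` and `<style>` elements.

```python
from html.parser import HTMLParser

class VisibleText(HTMLParser):
    """Collects text outside <script> and <style>: what exists before JavaScript runs."""

    def __init__(self):
        super().__init__()
        self.skip = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip:
            self.skip -= 1

    def handle_data(self, data):
        if not self.skip:
            self.chunks.append(data)

def missing_without_js(raw_html, key_phrases):
    """Key phrases that do not appear in the server-sent HTML."""
    parser = VisibleText()
    parser.feed(raw_html)
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [p for p in key_phrases if p.lower() not in text]
```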

Orphan Pages

An orphan page has no internal links pointing to it, which makes it hard for search engine spiders (and users!) to find, so make sure you don’t leave orphan pages floating around your site!
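A simple way to spot orphan candidates is to compare the URLs you know about (from your XML sitemap, analytics, or logs) with the URLs that internal links actually point to. This sketch uses hypothetical data. A page linked only from other orphan pages would still be hard to reach, which a click-depth crawl from the homepage would reveal.

```python
def orphan_pages(known_pages, link_graph, home="/"):
    """Known pages (e.g. from the sitemap) that no internal link points to."""
    linked = {target for targets in link_graph.values() for target in targets}
    return sorted(set(known_pages) - linked - {home})
```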

Page Depth

The depth at which a page sits affects how easily search engine spiders can reach it. Aim for no more than three clicks from the homepage; otherwise, some pages may not get crawled and indexed properly because they are buried too deep in the site structure.

Redirect Chains

Redirect chains occur when a redirect points to a URL that redirects again, and so on. Each hop adds an extra request and slows loading for users, and crawlers may stop following a long chain before reaching the final page, so keep an eye out for these too!
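Given a map of redirects (source URL to target URL), every chain can be listed by following each source until it stops redirecting. The sketch below is illustrative; the fix for each reported chain is to make the first URL redirect straight to the last one.

```python
def redirect_chains(redirects):
    """redirects: URL -> URL it redirects to.
    Returns {start: [start, hop1, ..., final]} for chains of two or more hops; raises on loops."""
    chains = {}
    for start in redirects:
        path = [start]
        while path[-1] in redirects:
            nxt = redirects[path[-1]]
            if nxt in path:
                raise ValueError("redirect loop: " + " -> ".join(path + [nxt]))
            path.append(nxt)
        if len(path) > 2:  # more than one hop
            chains[start] = path
    return chains
```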

#7. Improve Ranking From A Technical SEO Standpoint

When it comes to improving rankings from a technical SEO standpoint, internal and external linking are two of the most critical factors.

Internal links help search bots understand how your pages relate to each other, providing context for where they fit in the grand scheme of things.

This makes it easier for them to crawl and index your content, which can lead to higher rankings. External links also play an important role by giving search bots a “vote of confidence” for your site.

Quality backlinks from reputable sources tell search engines that you have high-quality content worth crawling and indexing, which can boost your rankings significantly.

On the flip side, low-quality backlinks, or an unnaturally large number of links from a single source, can hurt your rankings.

You should also make sure existing links are up to date and point to valid URLs; broken or outdated links won’t do anything positive for your SEO performance!

#8. Improve Your Clickability

To improve your clickability, follow the steps below.

Structured Data

Structured data helps search engines understand the content of your page and can help you win SERP features like rich snippets or knowledge panels.

This can be done through Schema markup, a type of code that helps search engine crawlers better interpret what’s on your website.

For example, if you run an online store selling clothes, adding structured data for product reviews can make those reviews eligible to appear in Google’s SERPs when someone searches for “[your brand] clothing review.”

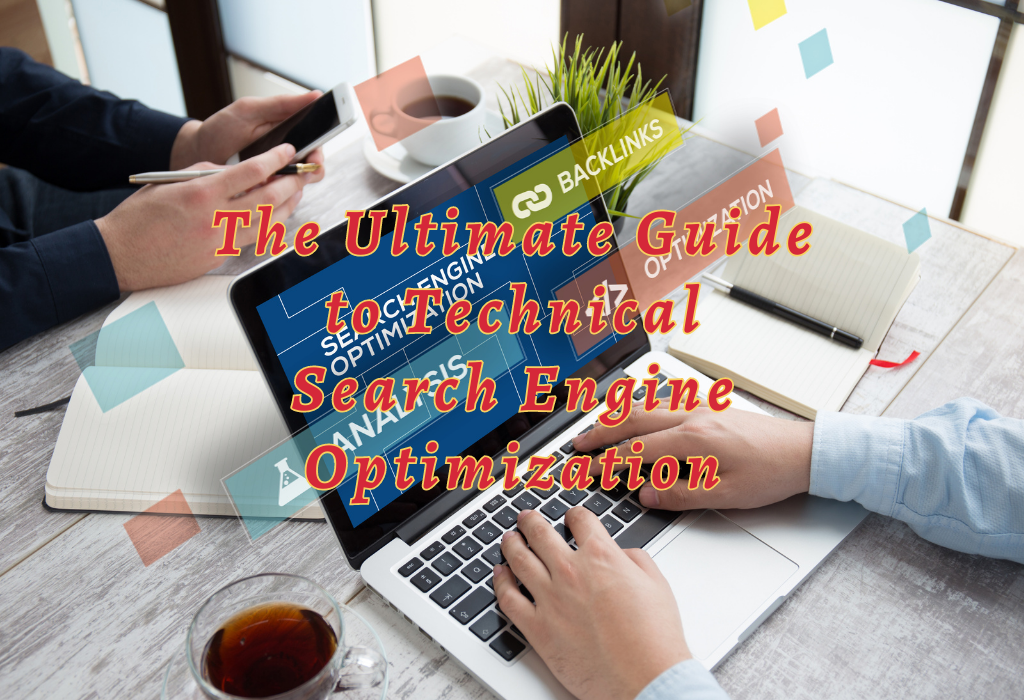
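Review markup is typically added as JSON-LD. Here is a sketch that builds schema.org `Product` markup with an `AggregateRating`; the product name and numbers are placeholders, and Google’s documentation lists further required and recommended properties for product rich results.

```python
import json

def product_jsonld(name, rating_value, review_count):
    """Schema.org Product markup with an aggregate rating (values are placeholders)."""
    return json.dumps({
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
    }, indent=2)

# The markup is embedded in the page inside a JSON-LD script tag.
snippet = ('<script type="application/ld+json">\n'
           + product_jsonld("Organic Cotton T-Shirt", 4.6, 128)
           + "\n</script>")
```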

Optimize For Featured Snippets

Another way to improve clickability is to optimize for Featured Snippets (also known as Position Zero). These answers appear at the top of the SERP above the other organic results and often include images or videos along with the text. Google selects featured snippets automatically and offers no markup to request one, so the way to win a snippet is to provide the best, clearest answer to the searcher’s query.

Google Discover

Don’t forget about Google Discover (formerly Google Feed), which shows users personalized content based on their interests, mainly on mobile devices.

Keep track of how users interact with your content in Discover (Google Search Console has a dedicated Discover performance report), since it can bring people directly to your site without a traditional search query!

Optimizing titles and meta descriptions specifically for Discover can draw attention from potential readers who may not have found you otherwise.

Conclusion

Technical SEO ensures that your site can be found and indexed by search engines while giving visitors a better user experience. This ultimate guide to technical search engine optimization has offered a comprehensive overview of the fundamentals of this critical aspect of web development.

From understanding what technical SEO is and why it’s essential to key elements such as site structure and navigation, crawling, rendering and indexing, thin and duplicate content, and page speed optimization, this guide covers what you need to get started with technical SEO today!

Want to improve your website’s visibility and ranking in search engine results? With tips on site architecture, usability, and user experience (UX), this guide gives you an easy-to-follow roadmap for getting your site noticed by potential customers. Start putting technical SEO to work and make more informed decisions about how to optimize your website today!